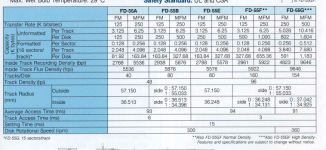

Those values in the table are just standard values for given data rates, modulation, and rotational speed. They don't speak to the real capacity of a drive, no holds barred. Anyone who's written a formatter knows them well: they're needed to calculate inter-sector gaps.
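As a rough illustration of the arithmetic a formatter does, here's a minimal Python sketch that works out nominal track bytes from data rate and spindle speed, then splits the leftover space into equal inter-sector gaps. The `overhead_per_sector` value of 62 is an assumed lump sum (roughly what IBM-style MFM sync bytes, address marks, ID field and CRCs cost), the index-area preamble is ignored, and the function names are hypothetical.

```python
def raw_track_bytes(data_rate_bps: int, rpm: int) -> int:
    """Nominal bytes per revolution: bits/s * seconds per rev / 8 (integer math)."""
    return (data_rate_bps * 60) // (rpm * 8)


def inter_sector_gap(track_bytes: int, sectors: int, sector_size: int,
                     overhead_per_sector: int) -> int:
    """Split the space left after all sectors into equal gaps.

    overhead_per_sector is a hypothetical lump sum standing in for the sync
    bytes, address marks, ID field and CRCs of one sector.
    """
    used = sectors * (sector_size + overhead_per_sector)
    if used > track_bytes:
        raise ValueError("sectors do not fit on the track")
    return (track_bytes - used) // sectors


# DD 5.25" MFM: 250 kbit/s at 300 RPM
track = raw_track_bytes(250_000, 300)
gap = inter_sector_gap(track, sectors=9, sector_size=512, overhead_per_sector=62)
print(track, gap)  # 6250 120
```

A real formatter would also reserve room for the index-area preamble and use a fixed format gap rather than dividing everything evenly.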

Recall that a floppy disk system is very imperfect. The mechanism has to tolerate variations in vendor media (i.e., the goop smeared on the cookie) and in the drive's own speed regulation. Tossing all that aside, what about raw track capacity, with one sector per track?
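Even with one sector per track, speed regulation still sets the limit: a spindle running fast shortens the revolution, so fewer bits fit before the write wraps around onto its own start. This minimal sketch budgets for that case. The 2% speed tolerance and the 100-byte single-sector overhead are assumed figures, not values quoted for any particular drive, and the function names are hypothetical.

```python
def worst_case_track_bytes(data_rate_bps: int, rpm: int, speed_tol_pct: int) -> int:
    """Bytes per revolution if the spindle runs speed_tol_pct percent fast.

    Integer math avoids floating-point rounding at the boundary.
    """
    return (data_rate_bps * 60 * 100) // (rpm * (100 + speed_tol_pct) * 8)


def single_sector_payload(data_rate_bps: int, rpm: int, speed_tol_pct: int,
                          overhead_bytes: int) -> int:
    """Largest single sector after a hypothetical fixed overhead (sync, ID, CRC, splice)."""
    return worst_case_track_bytes(data_rate_bps, rpm, speed_tol_pct) - overhead_bytes


nominal = worst_case_track_bytes(250_000, 300, 0)   # 6250 bytes at exactly 300 RPM
fast = worst_case_track_bytes(250_000, 300, 2)      # 6127 bytes if 2% fast
payload = single_sector_payload(250_000, 300, 2, 100)  # 6027 bytes
```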

One thing to consider is that the read channel is very much analog in nature. Take, for example, the iconic MC3470 read amplifier. Note that there's a low-pass filter on it to reduce the effects of high-frequency noise. You can adjust that filter to allow a somewhat higher data rate, all other factors permitting. We did that with Tandon 100 tpi drives on our system and could fit 12 sectors of 512 bytes per track on a DD 5.25" disk using GCR. The downside is that the setup is a little more finicky when it comes to media.
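A back-of-envelope sketch shows why the filter had to be opened up. The post doesn't say which GCR code was used, so this assumes a 4-of-5 group code such as the one Commodore used (8 data bits become 10 channel bits, and adjacent 1s are allowed, so transitions can be one cell apart), a 300 RPM spindle, and a hypothetical 40 bytes of per-sector overhead. Under those assumptions, 12 × 512-byte sectors need flux transitions about 3 µs apart, versus a 4 µs minimum for DD MFM at 250 kbit/s.

```python
def gcr45_cell_rate(sectors: int, sector_size: int, overhead_per_sector: int,
                    rpm: int) -> float:
    """Channel cells per second needed to fit the sectors in one revolution.

    Assumes 4-of-5 GCR: each 8-bit byte becomes 10 channel bits.
    """
    channel_bits = sectors * (sector_size + overhead_per_sector) * 10
    return channel_bits * rpm / 60


def gcr45_min_flux_us(cell_rate: float) -> float:
    """4-of-5 GCR allows adjacent 1s, so transitions can be one cell apart."""
    return 1e6 / cell_rate


def mfm_min_flux_us(data_rate_bps: int) -> float:
    """MFM transitions are at least two half-bit cells (one bit time) apart."""
    return 1e6 / data_rate_bps


rate = gcr45_cell_rate(12, 512, 40, 300)
print(rate, round(gcr45_min_flux_us(rate), 2), mfm_min_flux_us(250_000))
# 331200.0 3.02 4.0
```

Change the overhead or RPM assumptions and the required spacing moves accordingly; the point is only that this density sits above what the stock DD read channel is built for.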

And there are still surprises. I took that same system, attached an HD 3.5" drive, and found that it didn't work at all with new HD media at the somewhat higher data rate. On the other hand, finding a DD floppy that worked was extremely hit or miss (and I have plenty of new media to test that with). Oddly, HD media, even the pretty ratty stuff, worked if I taped over the media sense hole. One of these days, I'll haul out the 'scope and figure out why that works. I suspect that the HD mode employs a bandpass filter with a specific "sweet spot".
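The "sweet spot" hunch can at least be put into numbers. This sketch computes MFM flux-transition spacings and the matching readback fundamentals at the standard DD (250 kbit/s) and HD (500 kbit/s) rates a 3.5" drive is designed around. It's only arithmetic: the bandpass filter is a hypothesis, and since the post doesn't give the "somewhat higher" rate, you'd have to plug that in yourself. If HD mode's read channel were tuned to the upper band, a rate only modestly above 250 kbit/s would sit mostly below it, which would fit the taped-hole result without proving anything.

```python
def mfm_flux_intervals_us(data_rate_bps: int) -> list[float]:
    """MFM transitions fall 2, 3 or 4 half-bit cells apart (1, 1.5, 2 bit times)."""
    half_cell_us = 1e6 / (2 * data_rate_bps)
    return [k * half_cell_us for k in (2, 3, 4)]


def readback_fundamentals_khz(intervals_us: list[float]) -> list[float]:
    """Alternating-polarity pulses spaced t apart repeat every 2t, so f = 1 / (2t)."""
    return [1e3 / (2 * t) for t in intervals_us]


for label, rate in (("DD", 250_000), ("HD", 500_000)):
    iv = mfm_flux_intervals_us(rate)
    print(label, iv, [round(f, 1) for f in readback_fundamentals_khz(iv)])
# DD [4.0, 6.0, 8.0] [125.0, 83.3, 62.5]
# HD [2.0, 3.0, 4.0] [250.0, 166.7, 125.0]
```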

One could undoubtedly write several books on the subject.