Chuck(G)


Personally, I think a chance to change horses for IBM and the PC community was lost when the Power architecture came out. Still very much alive; some of these chips have 18 cores...

From now on the only acceptable replies to this thread are unsigned integers directly responding to the question "So how many cores are enough these days?".

2.

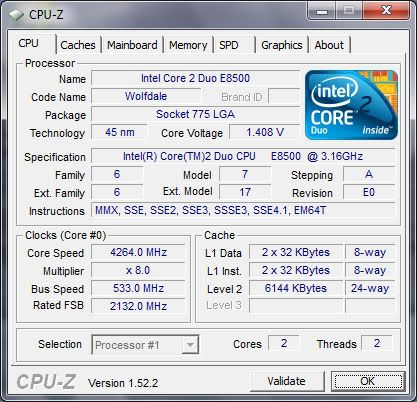

Heh. I chose "two" partially tongue-in-cheek, partially because at this point I'd say that the early Core 2 Duo machines sit just above the "too slow" line for general-purpose computing; i.e., while I as a geek can easily find good uses for older machines, a C2D is about the slowest thing I'd feel comfortable, say, handing to a computer-phobic elderly relative and setting them loose on YouTube, Facebook, etc.

A real geek should be overclocking the nuts off it

C2Q on an Asus P5N-D is the way to go, I think. Side by side with a brand new i7, there literally isn't much difference until I feed either one a solid state drive; then the difference is very noticeable. And yes, a true geek overclocks the pants off his computers.

I'll chime in: Because some applications don't play nice with the system, you must have a bare minimum of 2. A realistic number given what you get on a typical web page these days is 4 cores, so that the loading and rendering threads are on their own metal.

Because I do multimedia editing and compression, I can never have enough cores. I've been running a Core i7 920 with hyperthreading turned on (4 cores, total of 8 hardware threads) overclocked to 3.2GHz for almost 7 years. I'm waiting until 6- or 8-core desktop Intel CPUs are in my price range before I upgrade again.
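For anyone who wants to check the core/thread split on their own box (e.g. the "4 cores, 8 hardware threads" above), here is a minimal Python sketch. It assumes a Linux x86-style `/proc/cpuinfo` layout with `processor`, `physical id` and `core id` fields; the function name `count_cores` and the `SAMPLE` text are made up for illustration and are not from any library.

```python
def count_cores(cpuinfo_text):
    """Return (physical_cores, hardware_threads) from /proc/cpuinfo-style text.

    Assumption: each logical CPU is a blank-line-separated block that carries
    'processor', 'physical id' and 'core id' fields (typical on x86 Linux).
    If the id fields are missing, every thread collapses into one "core".
    """
    threads = 0
    cores = set()
    for block in cpuinfo_text.strip().split("\n\n"):
        fields = {}
        for line in block.splitlines():
            if ":" in line:
                key, value = line.split(":", 1)
                fields[key.strip()] = value.strip()
        if "processor" not in fields:
            continue  # not a logical-CPU block
        threads += 1
        # Two hyperthreads of one core share the same (package, core) pair.
        cores.add((fields.get("physical id"), fields.get("core id")))
    return len(cores), threads


# Hypothetical sample: one package, 4 cores, 2 hardware threads per core.
SAMPLE = "\n\n".join(
    f"processor\t: {cpu}\nphysical id\t: 0\ncore id\t: {cpu % 4}"
    for cpu in range(8)
)

print(count_cores(SAMPLE))  # (4, 8)
```

On a real Linux machine you would feed it the file contents instead, e.g. `count_cores(open("/proc/cpuinfo").read())`; other operating systems need a different source for the same numbers.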

Well, hyperthreading reduces the performance of a core, so unless you need the parallelism it'll give less performance. So for my very demanding single-threaded processing I have hyperthreading switched off on the big server.

-Tor

So all of us with 2 or 4 core CPUs with hyperthreading can see an improvement by disabling it?

Of course not. Only when your usage fits the case where you need maximum single-thread performance, e.g. the job you need done cannot be parallelized and you need to process the data in the shortest amount of time. That was the case I described in what you quoted.
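The "cannot be parallelized" point is roughly what Amdahl's law captures (that framing is an addition here, not something the posters cite). A rough Python sketch follows, assuming a job whose parallelizable fraction is known; the name `amdahl_speedup` is hypothetical, and the model deliberately ignores any per-core slowdown from hyperthreading.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Ideal speedup on `cores` cores when only `parallel_fraction` of the
    work can run in parallel (Amdahl's law). Illustrative model only."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Compare a fully serial job, a half-parallel job and a mostly parallel one.
for fraction in (0.0, 0.5, 0.95):
    speedups = [round(amdahl_speedup(fraction, n), 2) for n in (1, 2, 4, 8)]
    print(f"parallel fraction {fraction}: {speedups}")
```

For a fully serial job (fraction 0.0) the speedup stays at 1.0 no matter how many cores you add, which is the situation where only per-thread performance matters.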