Hello!

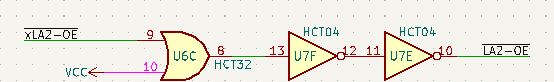

I'm coming to this thread because I have a problem related to XTIDE on a board I've designed, based on Monotech's XT-IDE-Deluxe, but I'm still not sure whether it's a design problem or a bug in the XTIDE BIOS. To give you some context, the card is as follows:

Details can be found on the product page of my Tindie shop, although it is not available for purchase at the moment. You can find the schematic here.

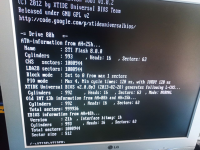

Although the product is built around an SD to IDE card adapter, I wanted to rule out any incompatibility with it, so the tests are being carried out (with the same results) using a CF to IDE card adapter and a 512 MB card that I have previously verified to work perfectly with an XTCF-based card and the corresponding XTIDE BIOS:

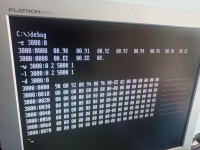

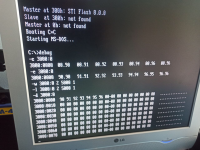

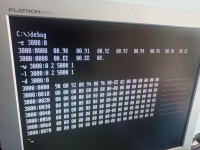

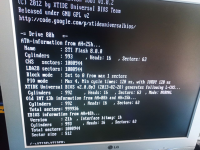

Having detailed the context, I will now explain the problem. During writes, half of the bytes are not written properly: normally 00 is written instead of the correct byte, although I have noticed that if the previous byte is EE, the result is 02 instead of 00. But I think a debug image is worth a thousand words, as it is self-explanatory:

At first I thought it was a problem with the design of the card, but if that were the reason, what I find strangest is that it only happens when writing. Reading is always correct: it returns the content that is actually recorded in the sectors. These debug commands use interrupt 13h, with AH = 02 and 03 for read and write respectively:

Code:

    ; Project name : XTIDE Universal BIOS
    ; Description  : Int 13h function AH=3h, Write Disk Sectors.
    ;
    ; XTIDE Universal BIOS and Associated Tools
    ; Copyright (C) 2009-2010 by Tomi Tilli, 2011-2013 by XTIDE Universal BIOS Team.
    ;
    ; This program is free software; you can redistribute it and/or modify
    ; it under the terms of the GNU General Public License as published by
    ; the Free Software Foundation; either version 2 of the License, or
    ; (at your option) any later version.
    ;
    ; This program is distributed in the hope that it will be useful,
    ; but WITHOUT ANY WARRANTY; without even the implied warranty of
    ; MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
    ; GNU General Public License for more details.
    ; Visit http://www.gnu.org/licenses/old-licenses/gpl-2.0.html
    ;

    ; Section containing code
    SECTION .text

    ;--------------------------------------------------------------------
    ; Int 13h function AH=3h, Write Disk Sectors.
    ;
    ; AH3h_HandlerForWriteDiskSectors
    ;   Parameters:
    ;       AL, CX, DH, ES: Same as in INTPACK
    ;       DL:     Translated Drive number
    ;       DS:DI:  Ptr to DPT (in RAMVARS segment)
    ;       SS:BP:  Ptr to IDEREGS_AND_INTPACK
    ;   Parameters on INTPACK:
    ;       AL:     Number of sectors to write (1...128)
    ;       CH:     Cylinder number, bits 7...0
    ;       CL:     Bits 7...6: Cylinder number bits 9 and 8
    ;               Bits 5...0: Starting sector number (1...63)
    ;       DH:     Starting head number (0...255)
    ;       ES:BX:  Pointer to source data
    ;   Returns with INTPACK:
    ;       AH:     Int 13h/40h floppy return status
    ;       AL:     Number of sectors actually written (only valid if CF set for some BIOSes)
    ;       CF:     0 if successful, 1 if error
    ;--------------------------------------------------------------------
    ALIGN JUMP_ALIGN
    AH3h_HandlerForWriteDiskSectors:
        call    Prepare_BufferToESSIforOldInt13hTransfer
        call    Prepare_GetOldInt13hCommandIndexToBX
        mov     ah, [cs:bx+g_rgbWriteCommandLookup]
        mov     bx, TIMEOUT_AND_STATUS_TO_WAIT(TIMEOUT_DRQ, FLG_STATUS_DRQ)
    %ifdef USE_186
        push    Int13h_ReturnFromHandlerAfterStoringErrorCodeFromAHandTransferredSectorsFromCL
        jmp     Idepack_TranslateOldInt13hAddressAndIssueCommandFromAH
    %else
        call    Idepack_TranslateOldInt13hAddressAndIssueCommandFromAH
        jmp     Int13h_ReturnFromHandlerAfterStoringErrorCodeFromAHandTransferredSectorsFromCL
    %endif
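
For anyone who wants to reproduce the calls by hand, the CH/CL/DH layout described in the header comment above can be packed with a few lines of Python. This is only a convenience sketch: `pack_chs` is a name I made up for illustration, it is not part of the XTIDE sources, and the value ranges are simply the ones quoted in that comment.

```python
def pack_chs(cylinder: int, head: int, sector: int) -> tuple[int, int, int]:
    """Return (CH, CL, DH) for an old-style Int 13h call.

    Layout taken from the handler's header comment:
      CH = cylinder bits 7..0
      CL = bits 7..6: cylinder bits 9 and 8, bits 5..0: sector (1..63)
      DH = head (0..255)
    """
    if not 0 <= cylinder <= 1023:
        raise ValueError("cylinder must fit in 10 bits (0..1023)")
    if not 0 <= head <= 255:
        raise ValueError("head must be 0..255")
    if not 1 <= sector <= 63:
        raise ValueError("sector must be 1..63")

    ch = cylinder & 0xFF                      # low 8 bits of the cylinder
    cl = ((cylinder >> 8) & 0x03) << 6        # cylinder bits 9..8 into CL bits 7..6
    cl |= sector & 0x3F                       # sector number into CL bits 5..0
    dh = head
    return ch, cl, dh


# Hypothetical example values, not the sectors used in my tests.
ch, cl, dh = pack_chs(cylinder=0x123, head=2, sector=5)
print(f"CH={ch:02X} CL={cl:02X} DH={dh:02X}")
```

The resulting CH and CL go into CX and DH into the high half of DX before issuing the interrupt with AH = 02 or 03.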

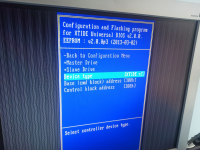

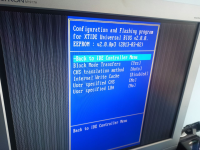

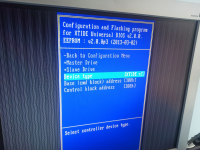

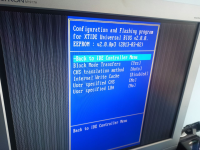
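
To pin the pattern down more precisely than by eyeballing dumps, something like the following Python sketch could compare the buffer that was supposed to be written against what is read back. It is only an illustration under my own assumptions: the function name `summarize_mismatches` and the sample bytes are made up, and the sample is not data captured from the card.

```python
from collections import Counter


def summarize_mismatches(expected: bytes, actual: bytes) -> dict:
    """Compare two equally sized buffers and summarize where they differ."""
    if len(expected) != len(actual):
        raise ValueError("buffers must have the same length")

    # Offsets where the read-back byte differs from the intended one.
    bad = [i for i, (e, a) in enumerate(zip(expected, actual)) if e != a]

    return {
        "count": len(bad),
        # If only one parity is affected, one half of each 16-bit word is suspect.
        "even_offsets": sum(1 for i in bad if i % 2 == 0),
        "odd_offsets": sum(1 for i in bad if i % 2 == 1),
        # (previous intended byte, wrong byte read back) pairs, to see whether
        # the wrong value depends on the byte before it. None at offset 0.
        "pairs": Counter(
            (expected[i - 1] if i > 0 else None, actual[i]) for i in bad
        ),
    }


# Made-up sample shaped like the symptom described above.
expected = bytes([0x11, 0x22, 0xEE, 0x44])
actual = bytes([0x11, 0x00, 0xEE, 0x02])
report = summarize_mismatches(expected, actual)
print(report["count"], report["even_offsets"], report["odd_offsets"])
```

If all mismatches fall on one parity, that would point towards one half of the data path during writes rather than towards the sector addressing.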

Finally, here is the XTIDE BIOS configuration I'm using (by the way, tested with beta 2.0.3 and also with r624):

Before I try to understand the ins and outs of how this interrupt implementation works at the lowest level and how it might interact with the board design, does anyone have any suggestions as to where the problem might lie?

Thank you very much in advance,

Aitor (spark2k06)