How many people have tried to write an ASCII sentence to execute as machine code?

Sure.. when I wrote my first assembly code I didn't realize I had to make my first instruction a jmp over my db statement. Found that odd (obviously; I came from BASIC, not ML).. of course I was writing this in debug, so I guess maybe a compiler would have been smarter and done that for me. For non-assembly programmers, my program would have looked like this:

Code:

db 'Goodbye cruel world!$' ; my string to print

mov ah,9 ;function 9 = print string in memory

mov dx,100 ;where in memory is the string to print

int 21 ;do function 9 with location 100

mov ax,4c00 ;function to exit

int 21 ;do function 4c with return code 00

Anyway, my first code was written the way I thought it would work in BASIC: present my variables first. But when I ran it I got garbage and a crash, and later found that (wow) it ran my variable as ML instead of treating my db as an assignment statement. I ended up doing a jmp over my db statement, so I added jmp 117 as my first command and changed the pointer to my variable (from 100 to 102, since the jmp takes two bytes). Yeah, the "correct" (better) way would have been to put my variable at the end of my program, but since I was writing it as I went I'd have had to find out how long my program was and THEN change all my references to variables after the fact to wherever they ended up, and if I ever added a line to my program I'd have to change my references again.
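For anyone following along, here is roughly what the fixed version would look like when entered in debug with a 100. This is a sketch reconstructed from the description above, not a saved copy of the original; it assumes a .COM-style program starting at offset 100 and that debug assembles the jmp as a short (2-byte) jump. All numbers are hex, as debug expects.

```asm
jmp 117                      ;0100: skip over the string (2 bytes, lands on mov ah,9)
db 'Goodbye cruel world!$'   ;0102-0116: 15h (21) bytes of data, '$' ends the string
mov ah,9                     ;0117: function 9 = print string in memory
mov dx,102                   ;the string now starts at 102 instead of 100
int 21                       ;do function 9 with location 102
mov ax,4c00                  ;function to exit
int 21                       ;do function 4c with return code 00
```

Putting the db last instead would put the string at 10C for this exact program (the five instructions add up to 0C bytes), and that is the number that has to be recounted every time a line is added.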

That was my old-school programming intro, but it was fun, and while assembly seemed so far out of reach in my mind, it was really a lot less complex than I had realized at first. With just HelpPC at the time for great examples and a list of assembly commands, it was quite fun.

I remember hearing somewhere that when Wozniak wrote the monitor code for the Apple II he wrote it in ML instead of assembly just because he was used to it. Later, I think, he had to transcribe it back to assembly for others to look at, but when it was just him coding he wrote it his way.

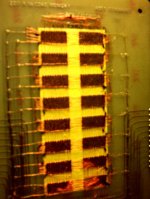

Thanks, and yeah, the video works now. I was hoping to hear one running, but that definitely explains how it works a lot better in my head now. So you'd read/write the bit with one of those heads? Definitely much more hard-drive looking than memory looking. Non-linear memory has always been something I never wrapped my head around. Like how the addressing of a memory core works: I don't quite understand how you read an x,y,z byte and not destroy everything in its path.

Very interesting and neat to see these things!