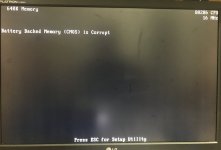

I have been testing and saw some inconsistent exits of the CPU from RESET.

This led me to believe that, for some reason, the clock pulse was either not stable or not of sufficient level.

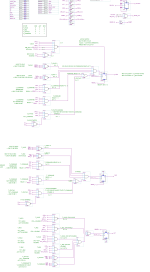

So I made an addition to the clock generation PCB: I added the 82284, but only to generate the clock signal, nothing else.

So basically I am using the 82284 only as a clock generator: no READY logic, no RESET control.

Inside the CPLD, nothing changed compared to my earlier tests.

The clock pulse from the 82284 output appears to have cleared up the inconsistent exits from RESET.

So apparently the clock phases were previously not of a consistent duration, which would cause the CPU to lose synchronization with the clock. That is clearly undesirable.
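To illustrate what "consistent phase duration" means, here is a minimal Python sketch. It assumes the clock edges could be captured as timestamps in nanoseconds (for example with a logic analyzer) and assumes a nominal 50% duty cycle; `phase_durations`, `phases_consistent` and the 1 ns tolerance are hypothetical choices. It simply compares every high and low phase against half the nominal period.

```python
def phase_durations(edges):
    """Split edge timestamps (ns), starting with a rising edge and
    alternating rising/falling, into high-phase and low-phase durations."""
    highs = [edges[i + 1] - edges[i] for i in range(0, len(edges) - 1, 2)]
    lows = [edges[i + 1] - edges[i] for i in range(1, len(edges) - 1, 2)]
    return highs, lows


def phases_consistent(edges, nominal_period_ns, tolerance_ns):
    """True if every high and low phase is within tolerance of half the
    period (assumes a nominal 50% duty cycle)."""
    highs, lows = phase_durations(edges)
    half = nominal_period_ns / 2
    return all(abs(d - half) <= tolerance_ns for d in highs + lows)


# Ideal 32 MHz 286_CLK: 31.25 ns period, 15.625 ns per phase.
good = [0.0, 15.625, 31.25, 46.875, 62.5]
# Hypothetical capture with one shortened and one stretched phase.
bad = [0.0, 15.6, 31.25, 40.0, 62.5]
print(phases_consistent(good, 31.25, 1.0))  # True
print(phases_consistent(bad, 31.25, 1.0))   # False
```

A clock whose phases wander like the second capture is the kind of signal that would fail such a check, while the 82284 output should pass it.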

I also discovered that the 82284 is able to run clocks of more than 40 MHz, because it was oscillating at that frequency on the veroboard setup.

The reason is that there are no PCB traces in this setup, so there is hardly any stray capacitance.

I even had to add some 10 pF load capacitors to get a stable clock from the 82284 output.

This opens up the path to doing the 20 MHz tests again, but this time with a normal crystal tied to the inputs of the 82284.

I just need to do this off the mainboard and it should work then. This will enable new tests later at 18 MHz, 20 MHz, etc.

For now I will keep testing at the proven, normal 32 MHz 286_CLK with the 82284 as a simple clock pulse generator, so I can focus my work on the 82284 and 82288 replacement logic. I will revisit generating and testing the clock in other ways later, if and when I have the 82284 and 82288 replacement done.
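The clock arithmetic behind these numbers can be sketched in Python. The 286 divides its CLK input by two internally to form the processor clock, so 286_CLK has to run at twice the CPU speed. I am assuming here that the 18 MHz and 20 MHz figures refer to the CPU clock; `clock_table` is just a hypothetical helper name.

```python
def clock_table(cpu_mhz):
    """Return (286_CLK frequency in MHz, 286_CLK period in ns) for a CPU clock.

    The 286 divides its CLK input by two, so 286_CLK = 2 x CPU clock.
    """
    clk_mhz = 2 * cpu_mhz
    period_ns = 1000.0 / clk_mhz
    return clk_mhz, period_ns


for cpu in (16, 18, 20):
    clk, period = clock_table(cpu)
    print(f"CPU {cpu} MHz -> 286_CLK {clk} MHz, period {period:.3f} ns")
```

So a 20 MHz CPU test needs a 40 MHz 286_CLK with a 25 ns period, which is in the region where the 82284 was seen oscillating on the veroboard.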

Regarding the state and system control replacement logic, the practical situation apparently differs from the design, which will almost certainly be a timing issue.

It will be a hard process of many steps to get through, because with the cheap equipment I have, I can't measure multiple signals at once, I can't measure signals inside the CPLD that are not routed to an output pin, and I can't compare signals in real time.

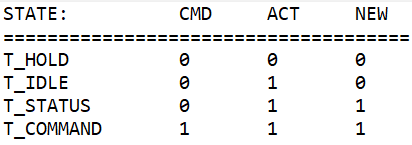

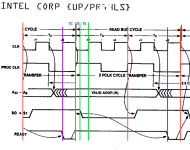

What I am doing now is looking at the timing diagram and comparing the theory with the actual situation, to check whether these signal states are reflected in the signal outputs of the logic. I will use the state machine as a reference, comparing the CPU states with the diagram.

I made a test output pin on the CPLD, for which I borrowed the UART clock output.

Each time, I program the CPLD to output something on this pin, and then I measure it.

In this way I can check whether signal outputs coincide, by decoding those signals together with an AND gate inside the CPLD, in the correct polarities.

If I measure a response on the test pin, I know that these signals coincide with each other.

This allows me to verify sections of the whole logic and try to trace the issues.
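The test-pin idea can be modeled in a few lines of Python. This is only a sketch of the logic, not CPLD code: `probe_output` is a hypothetical name, each signal is given as a pin level (1 = high, 0 = low) together with the level at which it counts as active, and the output is the AND of all those conditions, like the single product term programmed into the CPLD.

```python
def probe_output(levels, active_levels):
    """Model of the CPLD test output: 1 only if every named signal is at its
    active level (1 = active high, 0 = active low), i.e. one AND term."""
    return int(all(levels[name] == level for name, level in active_levels.items()))


# T_TS2 and ALE are active high; the S1 pin is active low.
conditions = {"T_TS2": 1, "ALE": 1, "S1": 0}

samples = [
    {"T_TS2": 0, "ALE": 0, "S1": 1},  # idle bus, nothing asserted
    {"T_TS2": 1, "ALE": 1, "S1": 0},  # all three coincide
    {"T_TS2": 1, "ALE": 0, "S1": 0},  # ALE missing
]
print([probe_output(s, conditions) for s in samples])  # [0, 1, 0]
```

Because S1 is active low, its required level is 0; only the sample where all three conditions hold produces a 1 on the test pin.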

I rewrote the logic in the CPLD from the equations into positive logic notation, matching the PAL equations as closely as possible, to reflect the actual situations that I need to look at.

The PAL article probably presents the state machine in the same logic polarity as inside the PAL, regarding all pins as positive signals (I can't be sure, because this is not mentioned). So I want to see whether the same things occur in the CPLD as described in the article and in the timing diagram published by AMD.

So I am now generating separate signals for the IDLE, TS2, TC1 and TC2 states.

Wherever a decoder input requires one of these states to be active, I substituted the decoder logic with the signal name of that T state.
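To make the idea of separate T-state signals concrete, here is a simplified Python model of such a state machine. It is my own assumption of how the transitions could look, not Doug's actual PAL equations: it advances on each falling edge of 286_CLK, leaves IDLE when a status is detected, goes through TS2, TC1 and TC2, and only returns to IDLE from TC2 when READY is active; otherwise it repeats TC1 and TC2 as a wait state. `TState`, `next_state` and `decoded` are hypothetical names.

```python
from enum import Enum


class TState(Enum):
    IDLE = "IDLE"
    TS2 = "TS2"
    TC1 = "TC1"
    TC2 = "TC2"


def next_state(state, status_active, ready_active):
    """Advance one falling edge of 286_CLK (simplified, hypothetical model)."""
    if state is TState.IDLE:
        # S1 or S0 pulled low by the CPU: a bus cycle starts.
        return TState.TS2 if status_active else TState.IDLE
    if state is TState.TS2:
        return TState.TC1
    if state is TState.TC1:
        return TState.TC2
    # TC2: finish the cycle if READY is active, otherwise insert a wait state.
    return TState.IDLE if ready_active else TState.TC1


def decoded(state):
    """One separate signal per T state, e.g. T_TS2 and T_TC1."""
    return {f"T_{s.value}": int(state is s) for s in TState}


# (status_active, ready_active) sampled at successive falling edges.
inputs = [(True, False), (False, False), (False, False),
          (False, False), (False, False), (False, True)]
state = TState.IDLE
trace = []
for status_active, ready_active in inputs:
    state = next_state(state, status_active, ready_active)
    trace.append(state.value)
print(trace)  # ['TS2', 'TC1', 'TC2', 'TC1', 'TC2', 'IDLE']
print(decoded(TState.TS2))
```

If READY never arrives, this model keeps cycling between TC1 and TC2, which is how a missing READY pulse would stall the bus cycle.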

This makes the logic a bit more "readable" for human eyes, and it is starting to make more sense.

This does give me more confidence that the concept is sound. Not that I doubt Doug's design, but I need references to check against.

So I am verifying sections of the CPU operation and AT functions step by step.

The first activity that should happen after the 286 gets out of RESET is loading and executing the BIOS code.

Logic inside the 286 synchronizes the CPU to the clock phase of 286_CLK at the point where it exits RESET.

That's why it is so important to both enter and exit RESET at a precise moment relative to the falling edge of 286_CLK, which Intel calls the system clock.
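A minimal Python sketch of the principle: the RESET request is registered only on a falling edge of 286_CLK, so the RESET output can only change at that moment, no matter when the request itself changes. This assumes one sample per half clock (1 = high) and a single register stage; a real design would normally add a second stage against metastability. `sync_reset` is a hypothetical name.

```python
def sync_reset(clk_samples, reset_request, initial=1):
    """Register reset_request on every falling edge of 286_CLK (1 -> 0).

    Returns the synchronized RESET level for each sample. RESET starts
    asserted (power-on) and can only change on a falling edge.
    """
    reset = initial
    prev_clk = clk_samples[0]
    out = []
    for clk, req in zip(clk_samples, reset_request):
        if prev_clk == 1 and clk == 0:  # falling edge of 286_CLK
            reset = req
        out.append(reset)
        prev_clk = clk
    return out


clk = [1, 0, 1, 0, 1, 0, 1, 0]
req = [1, 1, 1, 1, 0, 0, 0, 0]  # request released while clk is high
print(sync_reset(clk, req))     # [1, 1, 1, 1, 1, 0, 0, 0]
```

The request is released while 286_CLK is high, but RESET only goes low at the following falling edge.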

I want to find out whether the CPU is actually doing certain basic operations correctly, so I can get some idea of how viable the system would be with this solution.

Or, of course, how to get it into viable operation.

I am also trying to find out exactly in which area the cause of the missing READY pulses lies.

The READY mechanism involves a lot of logic which needs to be traced through.

So I need to start at the memory read operation, which triggers everything else in the AT system control logic.

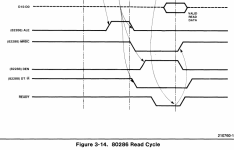

The first step is T_TS2, the "send status" phase of the 286 bus cycle.

The CPU leaves the IDLE state because it wants to do a memory read: it sets up the address lines with the address it wants and, at the same time, pulls S1 low (memory instruction or data read).
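The status decoding can be sketched in Python from the 80286 bus cycle status encoding (M/IO, S1 and S0; the COD/INTA line, which distinguishes instruction from data reads, is left out for brevity). `decode_status` is a hypothetical helper, not part of the CPLD logic.

```python
def decode_status(m_io, s1, s0):
    """Classify a 286 bus cycle from pin levels (1 = high, 0 = low).

    COD/INTA is ignored, so instruction and data reads are both
    reported as "memory read".
    """
    if s1 == 1 and s0 == 1:
        return "passive (no status)"
    if s1 == 0 and s0 == 1:
        return "memory read" if m_io else "I/O read"
    if s1 == 1 and s0 == 0:
        return "memory write" if m_io else "I/O write"
    # S1 = S0 = 0: interrupt acknowledge, or halt/shutdown when M/IO is high.
    return "halt / shutdown" if m_io else "interrupt acknowledge"


# Fetching BIOS code after RESET: M/IO high, S1 low, S0 high.
print(decode_status(1, 0, 1))  # memory read
```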

The state machine detects and decodes this, and enters the T_TS2 state at the next falling edge of 286_CLK.

The ALE signal is raised high and latched by the ALE decoders in the CPLD, and this loads the address of the memory location into the address latches.
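The address latches can be pictured as transparent latches gated by ALE: the outputs follow the address lines while ALE is high and hold the address after ALE falls. Here is a minimal Python model, assuming transparent latches such as the '373/'573 types; `AddressLatch` is a hypothetical name, and FFFFF0h is used as the example because it is the address of the 286's first instruction fetch after RESET.

```python
class AddressLatch:
    """Transparent latch gated by ALE: the output follows the address while
    ALE is high and holds the last address after ALE goes low."""

    def __init__(self):
        self.q = None  # nothing latched yet

    def update(self, ale, address):
        if ale:
            self.q = address
        return self.q


latch = AddressLatch()
# ALE high: the address passes through; ALE low: later changes are ignored.
steps = [(1, 0xFFFFF0), (0, 0x123456), (0, 0x000000)]
print([hex(latch.update(ale, addr)) for ale, addr in steps])
# ['0xfffff0', '0xfffff0', '0xfffff0']
```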

So we should see T_TS2 entered while S1 is pulled low and ALE is raised high.

I was able to verify that all these events happen simultaneously. This is good news, because the system is at least doing part of the operations correctly.

It would not surprise me if, as soon as I solve the READY issue, the whole system suddenly initializes as well.

So the address lines appear to be set up and latched.

The next step is the T_TC1 state.

I will do measurements on this next, and try to determine if this state is happening correctly.

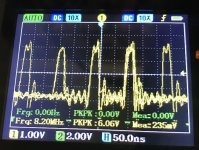

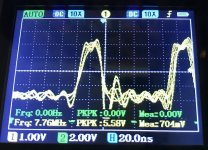

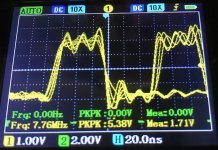

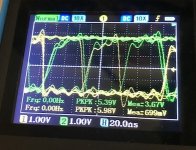

I have attached a scope photo of the combined signal T_TS2 & ALE & S1_n.

The scope is of course of poor quality, especially at this frequency, but it is possible to make out positive-going pulses of roughly 50 ns.

The 286_CLK period which covers the T_TS2 phase is 31.25 ns, so possibly the signals are high during the entire 286_CLK period.

286_CLK clocks the state machine, so if this state is active, we can assume the active period is exactly one full 286_CLK period.

After that the state machine will transition into the T_TC1 state.

I will update the test results as soon as I have more information.

Kind regards,

Rodney

