I took a normal 40 MHz crystal from a VGA card to test whether the 12 MHz UM82C284-12 could oscillate at this frequency, but this didn't work.

The resulting internal clock of the CPU dropped to one third of what it should be: 6.66 MHz.
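The one-third figure can be checked with simple arithmetic. The sketch below assumes two things: the 286 divides the 82284 CLK output by two internally, and the salvaged crystal is a third-overtone part running at its fundamental. The second point is only a possible explanation, not something I have confirmed. The function name and the `mode_divisor` parameter are made up for illustration.

```python
def cpu_clock_mhz(marked_mhz, mode_divisor=1):
    """Return the internal 286 clock for a crystal marked `marked_mhz`.

    mode_divisor = 1: crystal oscillates at its marked frequency.
    mode_divisor = 3: a third-overtone crystal running at its fundamental
                      (assumption, roughly one third of the marked value).
    """
    oscillation_mhz = marked_mhz / mode_divisor  # what the 82284 actually runs at
    return oscillation_mhz / 2                   # the 286 divides CLK by two


if __name__ == "__main__":
    print(round(cpu_clock_mhz(40), 2))      # intended internal clock
    print(round(cpu_clock_mhz(40, 3), 2))   # clock if the crystal runs at its fundamental
```

If that assumption holds, the second value matches what I measured, which would point at the crystal rather than at the 82284.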

This may be related to the crystal, or the frequency may simply be too high for this type of oscillator circuit. I will try other crystals as soon as I receive some more. The problem is that when the frequency is raised, the load capacitance the crystal needs drops below the PCB stray capacitance. So we would need something like negative capacitance in the circuit, which may involve a coil or an additional amplifier, and that starts to look like the crystal oscillator chip solution again. The other issue is that my cheap oscilloscope cannot show anything useful about 40 MHz clock pulses, so I can't really judge the quality of the clock waveform. At the higher speeds, either all clocks look the same or, when looking in detail, I get a lot of distortion. So I am left with testing different things and observing the resulting system.
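To make the "negative capacitance" point concrete, here is a rough sketch using the usual textbook approximation for a crystal with two capacitors to ground: the load seen by the crystal is the series combination of the two capacitors plus the stray capacitance. The 4 pF stray value is the PCB figure quoted in this post; the crystal load specifications of 12 pF and 3 pF are hypothetical example values, not datasheet numbers.

```python
def load_capacitance_pf(c1_pf, c2_pf, stray_pf):
    """Load seen by the crystal: C1 in series with C2, plus stray capacitance."""
    return (c1_pf * c2_pf) / (c1_pf + c2_pf) + stray_pf


def required_cap_pf(cl_pf, stray_pf):
    """Equal C1 = C2 value needed to present a load of cl_pf.

    A negative result means the stray capacitance alone already exceeds
    what the crystal wants, so no real capacitor can fix it.
    """
    return 2 * (cl_pf - stray_pf)


if __name__ == "__main__":
    stray = 4.0  # pF, PCB stray capacitance quoted in the post
    print(load_capacitance_pf(18, 18, stray))  # load with two 18 pF capacitors
    print(required_cap_pf(12, stray))          # hypothetical 12 pF crystal
    print(required_cap_pf(3, stray))           # hypothetical 3 pF crystal: negative
```

A negative result for the last case is exactly the situation described above: the board already loads the crystal more than it can tolerate.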

I read a lot of generic documentation about clock generators showing all sorts of circuits, but it's not very useful in a practical sense for this project. I did see some coil information with really tiny values, like in the pH range. Maybe with a circuit that uses a series coil I could get the crystal inputs themselves to oscillate with a normal 40 MHz crystal, so without using an oscillator IC. Or if I can find a 40 MHz crystal with much better specifications, it may also work with the 4 pF PCB stray capacitance loading it. I have thought about disconnecting the 82284 crystal pins from the PCB and soldering the crystal directly to the chip, which is a bit crazy, but I may try it later. I mean, if it works, that would at least be something to test with.

How the clock is generated in the 82284 is really sensitive at much higher clock speeds like 20 MHz, so results may vary depending on how it is done, and I will try different methods to see how they compare. I have ordered a range of normal "fundamental mode" crystals from different suppliers. Anyway, when I use a crystal oscillator chip on the X2 input, I do need to load the 82284 crystal pins with some larger capacitors like 18 pF to have any chance of a POST. I hope I can improve this situation by finding alternative ways to get the 82284 to oscillate normally with a crystal using its internal oscillator circuits.

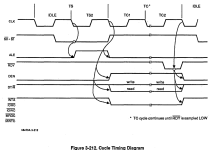

I am compiling a lot of information about the 82284 chip from various documentation and timing diagrams, and attempting to translate everything into actual circuits in order to assemble an equivalent schematic of the 82284. This is tricky work because there is a lot of synchronization and delaying going on inside the chip, and not all situations are treated equally, so it needs some additional circuits to evaluate the CPU cycle state. Testing this is also kind of dangerous and can seriously fry a system if there is an error. At the very least it will destroy the transceivers involved, if not more, which we don't want to happen. So I will need to do a lot of testing by comparing the outputs with those of an actual 82284 to see if I am getting close enough to attempt testing it in the AT system.

The 82284 equivalent logic, combined with additional decoding, can subsequently also be used to control a 386 CPU in a 286 AT system, and most likely this also applies to the 486 CPU. The status and control output pins of the 386 and 486 can be translated back to generate the equivalent signals of the 286. This is also mentioned in some VLSI datasheets, which provide some PAL equations for this. Basically I think it can also be done by simply looking at what the status pins represent and translating this, though there may be some timing issues as well. I will analyze the VLSI PAL data further if I decide to look at a 486 system, since it may provide some further clues about what they found in their test work. For the final circuit of a 486 recreation I would probably combine translating the status pins into 286 compatible outputs with the 82284/82288 equivalent circuits, starting with a 486SLC type system, which is close to the 16 bit 386SX. When everything is translated into normal circuits, the logic can possibly be reduced somewhat. So when we try to recreate a 486 system, the CPU timing will have to be analyzed in complete detail until everything is known. The advantage, however, is that this knowledge may help to tweak the timing even further and may yield even higher processing speeds.
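As a rough idea of what "looking at what the status pins represent and translating this" could mean, here is a minimal lookup sketch. It is not the VLSI PAL logic, which I have not reproduced here. The two bus cycle tables are written from my reading of the Intel 386 and 286 datasheets and should be checked against them before being relied on. It only covers the combinational decode: timing, ADS#, and the address-based halt/shutdown distinction are not modeled, and the function name is made up for illustration.

```python
# 386 bus cycle definition (M/IO#, D/C#, W/R#)
#   -> 286 bus cycle status (COD/INTA#, M/IO#, S1#, S0#)
# Assumption: table values taken from the Intel datasheets; verify before use.
I386_TO_I286 = {
    (0, 0, 0): (0, 0, 0, 0),  # interrupt acknowledge
    (0, 1, 0): (1, 0, 0, 1),  # I/O read
    (0, 1, 1): (1, 0, 1, 0),  # I/O write
    (1, 0, 0): (1, 1, 0, 1),  # memory code (instruction) read
    (1, 0, 1): (0, 1, 0, 0),  # halt / shutdown (address decode not modeled)
    (1, 1, 0): (0, 1, 0, 1),  # memory data read
    (1, 1, 1): (0, 1, 1, 0),  # memory data write
}


def translate_status(m_io, d_c, w_r):
    """Map a 386 cycle definition to the equivalent 286 status pins.

    Returns None for the combination that is not expected to occur.
    """
    return I386_TO_I286.get((m_io, d_c, w_r))


if __name__ == "__main__":
    for pins in sorted(I386_TO_I286):
        print(pins, "->", translate_status(*pins))
```

In real hardware this decode would also have to be gated and delayed so that the translated status appears with 286-style timing, which is where the timing issues mentioned above come in.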

When I have been able to piece together something worth testing as an initial 82284 replacement, I will program it into an ATF1508AS CPLD and test it to see if it could be functional. Replacing the 82284 will definitely help to improve the timing at 20 MHz and beyond, since we can change the logic and use the much faster CPLD chip. Of course, I will share all the circuits and ideas here as soon as I have something functional resulting from my tests.

Kind regards,

Rodney
