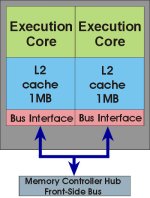

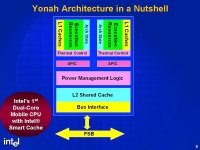

Multi-core doesn't mean all of the cores are on the same die.

Sure, I'm aware of that. And as you note, in some instances even when they *are* on the same die it's still topologically the same as if it were two separate packages linked only by the FSB. So... sure, I guess I'm guilty of oversimplifying, but the point I was responding to was a claim that two separate Pentium IIIs are somehow "better" than a "dual-core" CPU because they're "separate".

In the very worst case they're exactly the same, and a modern multi-core system with more tightly integrated caches is likely to be better.

You won't see it in benchmarks and it doesn't make sense on paper, but there are performance advantages you'll see in day-to-day usage. If you don't believe me, build one yourself sometime and give it a whirl.

Ever hear of something called the "placebo effect"? If you don't see it in benchmarks it's not real, sorry. And there's no need; I have had plenty of dual-socket systems, going back to an ABIT BP6 dual Celeron circa 1999, iterating through "industrial strength" ServerWorks-based Pentium III and P4 Xeons, and right now I have both an old Mac Pro tower and a gnarly 2018-ish vintage Lenovo dual-socket Xeon with a gawdawful total number of cores within swift-kicking range.

They might be innately somehow "cooler" than a mere single-socket system, but if that single socket has as many or more cores in it, it's probably objectively better. Is the argument here that the extra sockets are effectively "speed holes"?

The performance-gain comes from being able to dedicate 1 CPU to the operating system and 1 CPU to the program being run.

No. Look up how Windows NT works. Windows multiprocessing is *entirely* about the kernel scheduler picking CPUs to run threads on, and threading has been part of Win32 since the beginning. (The exception is the "Win32s" subset that gave Windows 3.11 limited 32-bit powers.) Even plain old Windows 95 encourages programmers to use threads to spin off tasks that are supposed to run concurrently instead of trying to implement that concurrency themselves cooperatively, and thus a well-written Windows 95 program can, at least in theory, gain something from running on NT. The asterisk here is that depending on Windows to "auto-magically" handle scheduling your threads wasn't necessarily the most performant or predictable way of doing it, which is one reason games in particular might avoid it and just depend on a monolithic, CPU-hogging process.
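
To make the cooperative-vs-threaded distinction concrete, here is a small Python sketch (not Win32 code; it doesn't touch `CreateThread` or any Windows API). The first half is the "do it yourself" approach, where each task must voluntarily yield to a hand-rolled round-robin loop; the second half hands the same work to ordinary threads and lets the OS scheduler decide when, and on which CPU, each one runs. The function names are made up for illustration, and in CPython the global interpreter lock keeps pure-Python threads from executing bytecode in parallel, so treat this as a picture of the programming model rather than a performance demo.

```python
from collections import deque
import threading

def count_task(name, n, log):
    """A cooperative task: do one step of work, then voluntarily yield."""
    for i in range(n):
        log.append(f"{name}:{i}")
        yield  # hand control back to our hand-rolled scheduler

def run_cooperatively(tasks):
    """Round-robin 'scheduler' implemented by the program itself.
    If any task forgets to yield, every other task stalls."""
    queue = deque(tasks)
    while queue:
        task = queue.popleft()
        try:
            next(task)
            queue.append(task)
        except StopIteration:
            pass  # task finished; drop it

def run_with_threads(n, log):
    """Threaded version: no explicit yields. The OS scheduler decides when
    each thread runs and, on a multi-CPU machine, which CPU it runs on."""
    lock = threading.Lock()

    def worker(name):
        for i in range(n):
            with lock:
                log.append(f"{name}:{i}")

    threads = [threading.Thread(target=worker, args=(name,)) for name in ("A", "B")]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

coop_log = []
run_cooperatively([count_task("A", 3, coop_log), count_task("B", 3, coop_log)])
print(coop_log)  # strictly interleaved, because our loop dictates the order

thread_log = []
run_with_threads(3, thread_log)
print(sorted(thread_log))  # same entries; the actual interleaving is up to the OS
```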

"Photoshop" was cited earlier as an early example of a "multiprocessing-aware" program, but I think when people throw this out there they might be confusing the Macintosh and Windows versions. Back in the mid-1990s Apple sold a few multi-CPU Power Macs well before OS X came out, and the pre-OS X consumer Mac OS wasn't even really a (preemptive) multitasking operating system, let alone a multiprocessor-aware one. To utilize the additional CPUs, Apple came up with a crude hack that allowed the secondary CPU(s) to be used roughly the way you might use a GPU today, i.e., you could write your program to spin off specific tasks that use that extra CPU as an accelerator. You could "properly" say that in cases like this one CPU is "dedicated" to the program being run and the other is "dedicated" to the operating system, but even that isn't really true, because in the Mac case a lot of the "MP-aware" program still needs to run in the "main" OS space. Windows never worked this way.
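
A rough analogy for the "extra CPU as accelerator" model, sketched in Python: the program explicitly packages up a specific task, hands it to one dedicated worker, and later collects the result itself. This only illustrates the programming model and is not Apple's actual multiprocessing API; `start_offload_worker` and `blur_row` are hypothetical names, and on any modern OS the worker thread is still placed on a CPU by the kernel scheduler.

```python
import queue
import threading

def start_offload_worker():
    """Start one dedicated worker that only runs what it is explicitly handed.
    Hypothetical helper; a loose analogy, not any real Mac OS API."""
    jobs = queue.Queue()
    results = queue.Queue()

    def worker():
        while True:
            job = jobs.get()
            if job is None:  # sentinel: shut the worker down
                break
            func, arg = job
            results.put(func(arg))

    t = threading.Thread(target=worker, daemon=True)
    t.start()
    return jobs, results, t

def blur_row(row):
    """Hypothetical 'filter' task: average each pixel with its neighbours."""
    out = []
    for i in range(len(row)):
        window = row[max(0, i - 1): i + 2]
        out.append(sum(window) // len(window))
    return out

jobs, results, worker_thread = start_offload_worker()
jobs.put((blur_row, [0, 30, 60, 90]))  # the program decides what gets offloaded
# ...meanwhile the "main" side keeps running its own code (UI, event loop)...
blurred = results.get()                # and must explicitly collect the result
jobs.put(None)
worker_thread.join()
print(blurred)
```

The key contrast with the threaded Win32 style is that nothing here is scheduled "for free": the program itself chooses which work crosses over to the helper and when to wait for it.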

Adobe *was* one of the first companies to specifically tout Windows NT's multiprocessing support, but their first 32-bit version of Photoshop (which ran on both NT and 95) "just" took advantage of the threading support Microsoft added to the API, and therefore left it up to the OS to schedule its subtasks like a modern OS would. In other words, it was written the same way you'd write it today: thread it up and leave it to the OS to decide where the threads go. The only thing that's going to be different when running this program on a multi-CPU machine instead of a single-CPU one is that the program might opt to spin up more threads in parallel to accomplish a given task if it thinks there are more CPUs, but ultimately it's up to the OS, which may decide to drop them all on the same CPU because it has other things vying for its attention.
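
The "thread it up and let the OS decide" pattern can be sketched like this, assuming a made-up CPU-heavy subtask (`checksum_chunk`) standing in for something like one tile of a filter. The program only consults the CPU count to decide how many pieces to split the job into; nothing in it says which CPU a piece runs on. As above, CPython's GIL means pure-Python work won't truly run in parallel, whereas native code like Photoshop's would; the structure is the point here.

```python
import os
from concurrent.futures import ThreadPoolExecutor

def checksum_chunk(data):
    """Hypothetical stand-in for a CPU-heavy subtask."""
    return sum(data) % 65521

def parallel_checksums(data, workers=None):
    # "Ask" how many CPUs there are and split the job into that many pieces.
    # os.cpu_count() may return None, hence the fallback to 1.
    workers = workers or os.cpu_count() or 1
    chunk = max(1, -(-len(data) // workers))  # ceiling division, at least 1
    pieces = [data[i:i + chunk] for i in range(0, len(data), chunk)]
    # The pool only creates threads; which CPU each one runs on (or whether
    # they all end up sharing one CPU) is decided by the OS scheduler.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(checksum_chunk, pieces))

data = list(range(1000))
print(len(parallel_checksums(data, workers=4)))
```

Running the same code with `workers=1` or `workers=8` gives the same answers; only the number of threads the OS has to place changes.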

There's certainly a discussion to be had about the merits of "single fast CPU vs. two slower ones", and there's also a whole rabbit hole you can fall down when discussing the evolution of multiprocessing support in various operating systems (interrupt handling, fine-grained locking, etc.), but, no, Windows NT-based multiprocessing was never as simple as "two CPUs: your 'main' program gets one and the OS gets the other!"
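
One common way to frame the "single fast CPU vs. two slower ones" question is Amdahl's law: only the parallelizable fraction of a job benefits from the second CPU. The sketch below uses made-up numbers (one CPU 1.5x as fast as each of two baseline CPUs) and deliberately ignores OS overhead, lock contention, and memory effects like NUMA, so it is a back-of-the-envelope model, not a benchmark.

```python
def run_time(parallel_fraction, cpus, cpu_speed):
    """Amdahl-style estimate of wall-clock time for a job that takes 1.0 time
    units on one baseline CPU (speed 1.0). Only `parallel_fraction` of the work
    can be split across `cpus`; the rest runs on a single CPU."""
    serial = (1.0 - parallel_fraction) / cpu_speed
    parallel = parallel_fraction / (cpus * cpu_speed)
    return serial + parallel

# Hypothetical comparison: one CPU 1.5x as fast vs. two baseline CPUs.
for p in (0.5, 0.9):
    one_fast = run_time(p, cpus=1, cpu_speed=1.5)
    two_slow = run_time(p, cpus=2, cpu_speed=1.0)
    winner = "one fast CPU" if one_fast < two_slow else "two slower CPUs"
    print(f"parallel fraction {p}: {one_fast:.3f} vs {two_slow:.3f} -> {winner}")
```

With half the work parallelizable, the single fast CPU wins (about 0.667 vs. 0.750 time units); at 90% the pair of slower CPUs pulls ahead (about 0.667 vs. 0.550).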

Edit: And regarding @GiGaBiTe's complaint about NUMA: that's a valid example of both why two sockets might be *worse* than one, and why the evolution of operating systems *does* matter. In the late 2000s my workstation at work was "upgraded" from a Mac Pro, in which all the cores are symmetric, to a dual-socket AMD system that had twice as many (supposedly faster) cores, but, yes, NUMA support kind of sucked at the time and that machine would randomly bog down compared to the older Mac. Shortly before I ditched that machine for the next one, iterative Linux upgrades had significantly improved it. I am far less familiar with how the NT kernel's multiprocessing support evolved, but I do remember, for instance, Microsoft crowing about significant improvements to the process scheduler in Windows 7 (NT 6.1)...