voidstar78

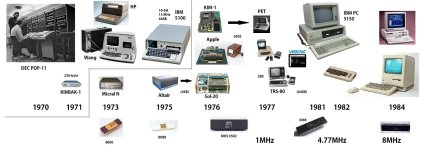

I had in mind a kind of "mural" of the origins of the personal computer. Attached is a concept/prototype of that idea.

I don't intend to debate which computer was "first." Just like cars, airplanes, and pianos (any engineered product, really), there was a series of prototypes and refinements, and then eventually a final general form emerged. I once saw a drawing of the first steam engine train from the 1700s: some kind of tractor-looking thing that didn't even have a track to run on yet! Was it really a train yet? And in a way, I kind of consider the upright piano the first personal computer: it has a keyboard for input, the sheet music is the software, and the duration of the string vibrations is the memory. In any case, "first" isn't that important and is very subjective.

There was also certainly a lot of pioneering hardware and software work before the 1970s that led up to the "personal digital computer." A computer, of course, "computes," but it has become more than just a numerical calculator. Computers execute programs, and the concepts of expressing and debugging a program were pioneered throughout the 1950s (FORTRAN, COBOL, the concept of "debugging," and system hooks that let you step through the instructions).

To me, the "personal" part of PC means it can be (1) bought or financed by an individual, (2) installed and set up by an individual, and (3) used and operated by an individual.

The PDP was a bit before my time, so I don't know that much about them. I've seen a PDP-1 in operation, and once one of those was purchased and set up, I can see it being operated by an individual. So I can agree it is a form of personal computer, but practically speaking, I don't think an individual could have afforded (and then maintained) one. I've seen pictures of PDP-8s fitting in the back seat of a car, so in a sense they were portable. And across the 1960s, it seems the price of the PDPs went from ~$120k down to ~$10k (depending on accessories and options, I'm sure).

Then there are the Wang 2200, HP 9830, and IBM 5100. In parallel are the Kenbak, Micral, and Altair. I draw a line between these systems (and the Kenbak), marking the difference between "classic" TTL designs and "off-the-shelf components." Of course, that distinction isn't exactly clear-cut. But view it this way: we can make modern replicas of the Altair, but we would struggle to replicate the IBM 5100 (and its famous "tincans"). And there are emulators of the Wang 2200 (I think only as far back as the "B" model), but I don't think anyone is up for replicating its proprietary processor.

The Micral N is interesting, as it was developed in France by a Vietnamese-born engineer. Remember, Vietnam was a French colony for decades, so there is a lot of French cultural influence in Vietnam. My understanding is that it was the French who "helped" the Vietnamese transition from traditional Asian-style written characters to a Latin-based alphabet. [Interesting side note: the first photograph is said to have come from France.] Did the concept of the Micral N make its way over to the folks at MOS? Or, like the light bulb and the airplane, were these developed independently in a couple of places at nearly the same time?

The rest of my mural (the KIM-1, Altair, Apple, and eventually the IBM PC) is all fairly well known and discussed. I don't mean to downplay the significance of the Altair; my view is that the Sol-20 represents the epitome of what an Altair kit could become (many of the Altair's accessories weren't readily available, since they couldn't be built fast enough or had quality issues). My only real issue with the Sol-20 is that BASIC had to be loaded; it wasn't built in. That's understandable for a 4K system (especially one designed more as a terminal front end), but I think it does mark a distinction between "still a kit" and "a desktop appliance" that anyone can sit down and use right away.

Then, of course, came the 1977 Trinity. 1978 was largely about filling orders for the Trinity, plus a mad dash toward an affordable disk drive system, which was starting to become fairly standard by 1979/1980. Even by 1982, 64KB systems were still common. But then IBM changed all that with a 16-bit CPU that could address multiple 64KB segments, and a simple OS that made it all seem seamless.

I once saw a video of the Commodore C64 production line (in Germany), which seemed very sophisticated to me for the early 1980s. I'm not sure whether the PDP, Wang, or IBM 5100 had that kind of large-scale production formality (clean rooms and automated testing); maybe someone here has insight about that? Also, how many pre-1977 systems were "hand-built"? (The Apple-1 certainly was; there was no automated assembly line.)

It was the process of arriving at a single-mainboard design that made large-scale production possible. That required some ingenuity, but it also required the industry to scale up and make the necessary chips available in quantity.

And I could have stopped at 1982, but I wanted to show the 1984 highlights: the Tandy 1000 SX and the Macintosh. (IIRC, Macintosh sales struggled compared to the ][gs?) The point of including those is that the "meta" of PCs had been established, and that overall design of the desktop PC has stood for 40+ years: a detached keyboard, a central CPU box with expansion cards, and a video display. From 1984 on, the industry exploded with many refinements on that formula (especially in portable PCs).

So in terms of "first personal computer": if you needed a machine to control another industrial machine, the Micral N may have been able to do it (with recognizable instructions and external I/O; I'm not sure any were sold internationally, so you'd probably have to read the operating manual in French). But for a system your mother could sit down at to store and recall recipes, well, a typical home couldn't fit a PDP. A Wang or IBM 5100 might be up to that task, except at $7,500+ they weren't very affordable either, and I don't recall coming across any verified production numbers for those early systems.

The IBM 5100 is sort of the "last of an era," in that it is like all the components of a previous decade's mainframe packaged up into a desktop unit. Yet the 5100 has all the merits of, say, the Commodore PET: a built-in screen, keyboard, and storage device, plus a generous 64KB space to program in.

Lastly, I wanted to note VisiCalc (1979) as a very pioneering "killer app" that really woke things up in terms of what these microcomputers could be used for (even with only 16KB, they could keep track of local team scores and other small-group needs). There was some digital music production going on and some industrial automation work (like controlling the lights in a theater production), but image processing hadn't yet come of age. With the line printer replaced by a CRT, the "visible calculator" (VisiCalc) became something that is still extremely useful to this day. From there it was on to accounting, payroll, and patient databases: all the work big mainframes had been doing for insurance companies, now available to small companies too.

There are lots of systems missing (CoCo, Amiga), but I'm wondering what folks think of this "mural."