falter

Veteran Member

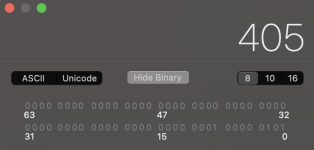

I've been working on a video about my Altair and goofed. I was doing the math program from the example guide and added 1 + 1, which worked swimmingly. Then I thought I'd try adding 2 and 3. Unbeknownst to me, I had accidentally left the leftmost data switch on (from when I was examining memory address octal 202) and deposited 202 and 203 as the numbers to be added. In octal that would equal 405, but a byte on the Altair only goes up to 377, so it came up with 005 on the data display. Just a curiosity question: what did it do here to arrive at that result? What happens to numbers that go over 377?
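For anyone who wants to reproduce the arithmetic, here is a rough Python sketch of what an 8-bit add does with those two values. It assumes the example program uses the 8080's ordinary 8-bit add, which keeps only the low eight bits of the sum and records the overflow in the carry flag. The `add8` helper is a made-up name for illustration, not anything from the Altair manual.

```python
def add8(a, b):
    """Model an 8-bit add: return (low 8 bits of the sum, carry-out flag)."""
    total = a + b
    result = total & 0o377   # keep only the low 8 bits (octal 377 = 255)
    carry = total > 0o377    # True when the sum did not fit in one byte
    return result, carry


# The values from the post, written as octal literals.
result, carry = add8(0o202, 0o203)
print(f"sum = {result:03o} octal, carry = {carry}")
```

The full sum is octal 405, which needs nine bits. The ninth bit is dropped from the stored byte, leaving 005, and the sketch reports it as the carry instead.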